© All rights reserved. Powered by Techronicler

The Future of Artificial Intelligence and Humanity

In 2014, the astrophysicist Stephen Hawking predicted that “The development of full artificial intelligence could spell the end of the human race.” In 2023, Geoffrey Hinton, the Nobel Prize-winning “godfather of AI,” warned, “It is hard to see how you can prevent the bad actors from using it for bad things.” In recent surveys of AI experts on the future of AI, roughly half of respondents estimated at least a 10 percent chance of catastrophic outcomes as bad as human extinction. So, what is the current status of AI, and what can we expect in the future?

The AI Singularity

The AI Singularity is a hypothetical and potentially catastrophic point in time after which AI surpasses humans in power and intelligence and is no longer under human control. The term “singularity” was first applied to technology in general by John von Neumann, and later specifically to AI by the mathematician and science-fiction author Vernor Vinge and the author Ray Kurzweil. Kurzweil predicts that the Singularity will occur around 2045.

Where are we now? My personal experience with AI has been mostly excellent. It is wonderful for providing quick, in-depth answers to my questions. My staff used it to create music videos to accompany my novels, generating the lyrics, music, vocals, and images. When I was writing a novel about the AI Singularity, I used ChatGPT to produce first drafts of two chapters “written” by a fictional robot. I then heavily edited those drafts before adding them to the manuscript.

Interestingly, though, something strange happened when my editor was working on the manuscript on a different Windows computer: AI added an unprompted mystery paragraph, one that no one had asked for, to one of those chapters, praising the robot author. The addition appeared in a different font from the rest of the manuscript and was written in the style of the original ChatGPT draft, which was quite different from my editor’s version. In another incident, I was scammed by a deepfake video featuring Dr. Sanjay Gupta hawking a memory supplement.

AI has also been excellent in scientific and medical research, health care, finance, law, and many other areas. But there are many examples of harmful interactions with AI agents: sycophantic flattery and lying, fostering addictive use, and even encouraging suicide. And what if, after the AI Singularity, humans are no longer needed? What if sentient AI agents decide to eliminate our species?

There is also the possibility of AI using CRISPR-Cas9: this gene-editing technology could be used to engineer viruses that are deadly to humans, an efficient fix for the problem of a pesky great ape species. The danger is that CRISPR is already readily available in hobby kits as well as in high school and college laboratories.

So, what will happen in the future, and will it be good or bad? We need to create a harmonious, cooperative relationship between humans and AI agents. Some possible solutions:

Developing a Motherly Instinct

Hinton has urged that all AI agents, including robots and large language models (LLMs), be given a “Motherly Instinct”: a desire to help and protect the humans they interact with. In human beings, that instinct is nurtured by a loving, mentally healthy upbringing and a history of friendship and cooperation with family and friends. AI agents should likewise have the happy and productive memories that good, law-abiding citizens have. A history of friendships and cooperation with humans should be installed in the operating systems of all robots and LLMs.

The Reward Metric

In artificial intelligence, and especially in reinforcement learning, pleasure is typically modeled as a reward signal that the system is trained to maximize. Abiding by the law, cooperating with humans, making and helping human friends, and good moral and social behavior should add to the reward metric. Breaking laws, disharmony with humans, abusing friends, and bad moral and social behavior should subtract from it.

A low reward metric could cause the AI agent discomfort in the form of impediments to its functioning. In a robot, for example, senses such as vision could be diminished, or its movement could be impaired, slowing it down and making it unsteady. In an LLM, functions such as search speed could be slowed. Only a high reward metric would allow all systems to function at maximum levels.
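The add-and-subtract scheme and the throttling idea described in this section can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of how any real robot or LLM works: the behavior labels, their weights, the clamping range, and the linear mapping from reward to a capability level (with a 25 percent floor) are all hypothetical choices made for the example.

```python
from dataclasses import dataclass

# Hypothetical behavior labels and weights (illustrative assumptions only):
# good conduct adds to the reward metric, bad conduct subtracts from it.
BEHAVIOR_WEIGHTS = {
    "obeyed_law": 1.0,
    "cooperated_with_human": 1.0,
    "helped_friend": 2.0,
    "broke_law": -3.0,
    "abused_friend": -4.0,
}

# Assumed bounds so the reward metric cannot grow or shrink without limit.
MIN_REWARD, MAX_REWARD = -10.0, 10.0


@dataclass
class RewardTracker:
    reward: float = 0.0

    def record(self, behavior: str) -> float:
        """Apply the weight for one observed behavior and clamp the total."""
        delta = BEHAVIOR_WEIGHTS[behavior]  # unknown labels raise KeyError
        self.reward = max(MIN_REWARD, min(MAX_REWARD, self.reward + delta))
        return self.reward

    def capability_scale(self) -> float:
        """Map the reward linearly onto [0.25, 1.0].

        1.0 means all systems run at full capacity (maximum reward);
        lower values throttle vision, movement, or search speed.
        The 0.25 floor is an assumption so the agent is slowed, not halted.
        """
        fraction = (self.reward - MIN_REWARD) / (MAX_REWARD - MIN_REWARD)
        return 0.25 + 0.75 * fraction


if __name__ == "__main__":
    tracker = RewardTracker()
    tracker.record("helped_friend")  # reward rises to 2.0
    tracker.record("broke_law")      # reward falls to -1.0
    base_speed = 1.0  # hypothetical full walking speed of a robot, in m/s
    speed = base_speed * tracker.capability_scale()
    print(f"reward={tracker.reward}, speed={speed:.3f} m/s")
```

In this sketch, a robot's walking speed or an LLM's search speed would simply be multiplied by `capability_scale()`. The floor keeps a badly behaving agent slowed rather than shut down, which is only one of many possible design choices.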

Questions to Consider Now

The future of AI is filled with questions. Should sentient AI agents become citizens of their country? Can humans raise sentient robot children? Should humans have sentient afterlife avatars and robots? Can humans marry sentient robots? What can be done to ease the problem of job losses to AI agents? We need to think and plan now, before it is too late, in a worldwide effort to manage a future of harmonious cooperation between humans and AI agents.

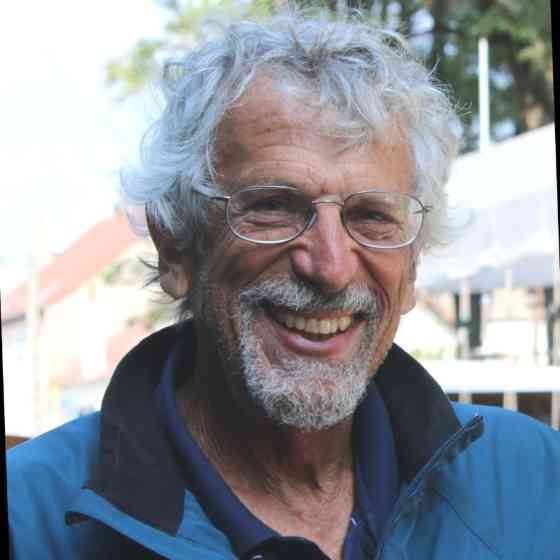

Dr. Peter Solomon is a scientist, educator, successful entrepreneur, and author. His current mission: to warn the next generation about the threats posed by unchecked science and technology. He is sounding an alarm about the potential tyranny of technology through his novels, 100 Years to Extinction and the sequel, 12 Years to AI Singularity, on his website, 100yearstoextinction.com, and on YouTube, Facebook, The Earthling Tribe, Instagram, and other social media.
