Algorithmic Accountability: Tech Leaders on Preventing Bias in Robotics

As machines increasingly mirror human capabilities—striding alongside us as humanoid companions or autonomously steering our journeys—what invisible boundaries must we draw to preserve dignity, equity, and safety in this brave new era?

Amidst the thrill of innovation, lingering shadows of surveillance, inequality amplification, and manipulative influence demand urgent scrutiny.

On Techronicler, visionary business leaders, insightful thought leaders, and seasoned tech professionals confront these pivotal dilemmas head-on, advocating for robust governance through independent audits and scenario simulations, stringent controls on silent data capture and decision transparency, equitable access to prevent social divides, and limits on autonomy to avoid exploitation of vulnerable groups.

Their collective wisdom underscores that ethical foresight isn’t optional—it’s the blueprint for technology that uplifts rather than undermines society.

From privacy safeguards to bias mitigation and public trust-building disclosures, these experts illuminate paths forward, challenging companies to prioritize humanity over haste.

Uncover how proactive measures can transform potential pitfalls into shared progress.

Read on!

Scenario Planning and Risk Disclosure Build Trust

Governance is the foundation.

Leadership needs independent evaluations of safety, privacy, and financial risk, and those evaluations should steer incentive systems toward building enduring public trust rather than pushing for fast deployment.

Scheduled audits keep these assessments current as the systems develop.

Organizations should use scenario planning as a method for ethical decision-making.

Before deploying their systems, they should run simulation exercises covering accident response, cyber incident management, and system failure.

These exercises surface operational weaknesses that would otherwise become apparent only during live operation.

Organizations need to maintain open disclosure about their risks.

Organizations that conceal known system weaknesses at the outset may find themselves more vulnerable later.

Open communication gives people a realistic picture of what can happen and helps safeguard public welfare.

Equal Access Prevents Automation From Expanding Inequality

The main problem is that automated systems now mediate fundamental channels of human communication.

Organizations need to decide which operations should be automated and which should keep human involvement, so that society does not lose its personal touch.

Balancing operational efficiency with social interaction lets communities preserve the bonds that keep them functioning.

Equal access to these services remains a major challenge.

If safe, convenient autonomous services reach only particular social groups, inequality between groups will widen.

Offering different access levels can spread the benefits of automated services across social groups.

Research in the public interest should examine how automation affects social connection over longer time spans, rather than concentrating on short-term advantages.

Deploying technology requires a planned approach that anticipates changes in social and economic structures.

Such an approach lets technology strengthen community ties instead of creating social segregation.

James Mikhail

Founder, Ikon Recovery

Data Capture and Manipulation Require Strict Controls

The first concern is silent data capture. Humanoid machines and autonomous vehicles use cameras, microphones, lidar, and GPS to capture faces and voices and to track location patterns in public and semi-public spaces.

Organizations need straightforward privacy notices that explain what data is collected, how long it is stored, and who can access it, along with procedures for users to opt out where feasible.

Privacy regulators already treat location and biometric information with extreme caution, and upcoming regulations are expected to impose even more stringent requirements.

Decision-making must also be explainable.

Operators need to understand why a robotic system made a decision that resulted in access denial, a route change, or a safety impact.

This requires detailed logging, independent safety assessments, and model architectures that allow both performance optimization and behavior inspection.
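To make the logging point concrete, here is a minimal Python sketch of a decision log that an independent assessor could inspect. Everything in it is an illustrative assumption rather than anything the contributor specified: the `DecisionRecord` fields, the `DecisionLog` class, and the choice to chain entries with SHA-256 hashes so that later edits are detectable.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import Any


@dataclass
class DecisionRecord:
    """One decision made by a hypothetical robotic system."""
    timestamp: float          # seconds since epoch, supplied by the caller
    decision: str             # e.g. "deny_access" or "reroute"
    reason: str               # human-readable explanation for operators
    model_version: str        # which model produced the decision
    inputs: dict[str, Any] = field(default_factory=dict)


class DecisionLog:
    """Append-only log. Each entry stores the hash of the previous entry,
    so an auditor can detect entries that were altered after the fact."""

    def __init__(self) -> None:
        self.entries: list[dict[str, Any]] = []

    @staticmethod
    def _digest(body: dict[str, Any], prev_hash: str) -> str:
        payload = json.dumps(body, sort_keys=True) + prev_hash
        return hashlib.sha256(payload.encode()).hexdigest()

    def append(self, record: DecisionRecord) -> dict[str, Any]:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        body = asdict(record)
        entry = {**body, "prev_hash": prev_hash,
                 "hash": self._digest(body, prev_hash)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the hash chain; return False if any entry was changed."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items()
                    if k not in ("prev_hash", "hash")}
            if entry["prev_hash"] != prev_hash:
                return False
            if self._digest(body, prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

An operator reviewing an access denial would read the `reason` field of the relevant entry, while an outside assessor could call `verify()` to check that the record has not been altered since it was written. A production system would also need durable storage and access controls, which this sketch leaves out.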

The manipulation risk these systems pose remains poorly understood by experts.

A humanoid platform that mimics human appearance and speech patterns can be highly effective at obtaining compliance from people.

Design teams need to test how much influence these systems have on vulnerable groups, including children, elderly people, and those experiencing emotional distress.

Persuasive functions should be disabled when a user falls into one of these risk categories.
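One way to picture such a safeguard is as a gate in front of any persuasive response. The Python sketch below is only an illustration under stated assumptions: the `RiskCategory` values mirror the groups named above, while `UserContext`, `persuasion_allowed`, and `choose_response` are hypothetical names. How a system would detect these categories is left open, and that detection raises privacy questions of its own.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class RiskCategory(Enum):
    CHILD = auto()
    ELDERLY = auto()
    EMOTIONAL_DISTRESS = auto()


@dataclass(frozen=True)
class UserContext:
    """What the system believes about the person it is talking to.
    How these flags get set is out of scope for this sketch."""
    risk_categories: frozenset = frozenset()


def persuasion_allowed(user: Optional[UserContext]) -> bool:
    """Fail closed: an unknown user is treated as potentially at risk."""
    if user is None:
        return False
    return not user.risk_categories


def choose_response(user: Optional[UserContext],
                    neutral: str, persuasive: str) -> str:
    """Return the persuasive wording only when the gate permits it."""
    return persuasive if persuasion_allowed(user) else neutral
```

The design choice worth noting is the default: when the system cannot tell who it is talking to, it falls back to the neutral response rather than the persuasive one.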

Darryl Stevens

CEO & Founder, Digitech Web Design

Privacy by Design Protects Human Dignity

Humanoid robots and self-driving tech are beginning to enter our homes and streets.

Many challenges arise here, but the primary ethical one centers on whom these machines really serve.

Machines that can see, move, and decide alongside humans need to be able to operate ethically.

This means that features such as local autonomy, encrypted data, and transparent intent need to be present.

Otherwise, we’re just inviting constant surveillance into our homes and not truly getting tech “helpers”.

In production and distribution, privacy can’t be an afterthought.

It needs to be a duty of any company producing humanoid robots so that our dignity and independence are protected.

Overtrust Hides True Technology Limits

People sometimes trust humanoid robots and self-driving tech a little too much. A lot of it comes from how smooth the design looks.

It gives the impression that the system can handle anything, even when it still has clear limits.

Most users do not see those limits right away.

And when something goes wrong, it is not always obvious who should take responsibility.

That is why these tools raise ethical questions.

Until companies explain more clearly what the technology can and cannot do, people might rely on it in situations where a human should still be paying attention.

Nisha Kakadia

Director & Head of Platform Strategy, Sociallyin

Transparency Ensures Safe, Accountable Innovation

With humanoid robots and self-driving systems becoming more present in daily life, the real ethical challenge is ensuring that innovation never moves faster than public safety.

Companies need to focus on transparency, explaining how their models make decisions, what data they rely on, and how they behave in unpredictable scenarios.

They also need clear accountability.

When an autonomous system fails, responsibility must be defined, not diluted.

Finally, continuous monitoring is essential to prevent bias and unsafe behavior, because an AI that learns from flawed data can make flawed decisions.
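As a rough illustration of what continuous bias monitoring can look like, the Python sketch below compares how often a system returns a favorable decision for each group and flags the gap for human review. It is a simplified assumption, not a method the contributor described: the group labels, the function names, and the 0.1 threshold are all hypothetical, and a rate gap of this kind (often called a demographic parity difference) is only one of several fairness measures.

```python
from collections import defaultdict
from typing import Iterable


def approval_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Share of favorable outcomes per group.
    Each item is (group_label, outcome_was_favorable)."""
    totals: dict[str, int] = defaultdict(int)
    favorable: dict[str, int] = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        favorable[group] += int(outcome)
    return {group: favorable[group] / totals[group] for group in totals}


def parity_gap(decisions: Iterable[tuple[str, bool]]) -> float:
    """Difference between the highest and lowest group rates."""
    rates = approval_rates(decisions)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


def needs_review(decisions: Iterable[tuple[str, bool]],
                 max_gap: float = 0.1) -> bool:
    """Flag the batch for human review when the gap exceeds max_gap.
    The default threshold is an arbitrary placeholder."""
    return parity_gap(decisions) > max_gap
```

Run on each new batch of logged decisions, a check like this turns "monitor for bias" into a recurring, auditable step. A flagged gap is a prompt for investigation, not proof of unfairness.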

Trust is the foundation of adoption.

The companies that will lead this space are the ones capable of building technology that is safe, explainable, and aligned with human values, without cutting corners.

Sustainability Must Outpace AI Speed

The hardware race is only part of the story.

The real culprit is our obsession with performance over permanence.

We’ve built an AI economy addicted to speed, not sustainability, where chips age faster than ideas.

Until efficiency and recyclability become as important as teraflops, e-waste will keep outpacing innovation.

AI hardware is a Frankenstein of rare metals, glued layers, and proprietary designs.

It wasn’t built to come apart. The irony is that we’ve engineered brilliance into computation but chaos into its afterlife.

We need circular design baked in from the chip level—recyclable by design, not by regret.

AI is both the arsonist and the firefighter in this story.

Robotic sorting and predictive logistics are promising, but they’re nibbling at the edges.

Until we make sustainability a design constraint, not an afterthought, AI will continue cleaning up a mess of its own making.

We need an AI-for-AI accountability loop where the same intelligence driving model training also measures its environmental debt.

Transparency dashboards, carbon-linked incentives, and global standards could turn sustainability from a cost center into a competitive edge.

The next great AI breakthrough might not be faster; it might simply waste less.

Anurag Gurtu

CEO, Airrived

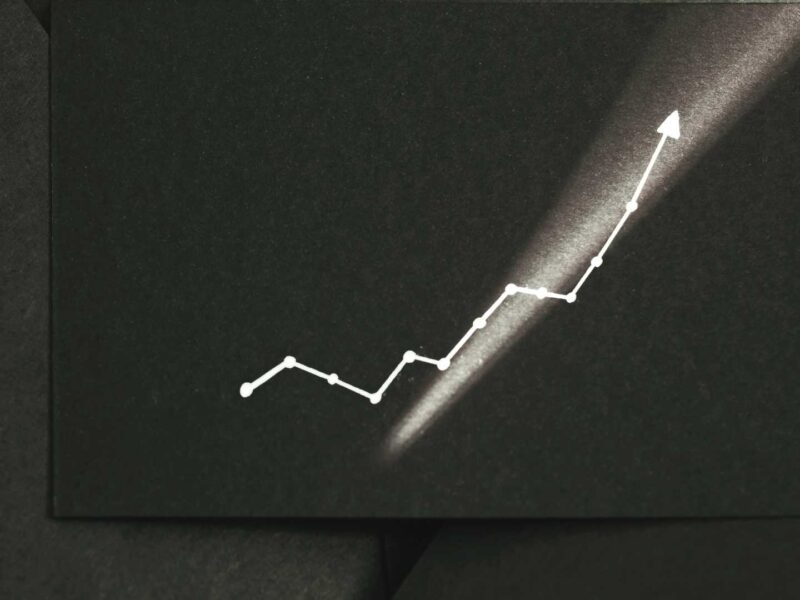

Circular Hardware Reverses E-Waste Trend

The short upgrade cycle of AI accelerators is a major driver of e-waste, but the bigger problem is the lack of a mature circular pathway for recovering precious metals and specialty materials at scale.

As circular systems become mainstream and reused GPUs find a second life in lower-tier workloads, the waste curve can flatten.

AI hardware is complex, but it contains valuable metals and engineered materials that absolutely can be recovered once processes become economically optimized.

We are moving toward automated disassembly, robotic separation, and material harvesting that will make recycling both efficient and profitable.

AI-powered recycling will eventually outpace the waste it creates because automation dramatically reduces the cost of recovery.

As reuse and remanufacturing of older GPUs become normal, and as AI routing systems reduce landfill leakage, the net environmental burden can be reversed.

We need policy that encourages circular design, extended producer responsibility, and incentives for reused hardware in non-critical workloads.

At the same time, AI-driven recycling infrastructure must scale so that recovering materials becomes cheaper than mining them, which is the economic tipping point for sustained global change.

Kevin Surace

CEO, Appvance

Limit Autonomy, Demand Human Oversight

When developing humanoid robots and self-driving tech, it is imperative not only to impose limits on their autonomy but also to ensure that those who interact with them understand those limits and that the systems remain subject to human oversight.

The programming of self-driving cars should be transparent to riders, authorities and the public, so that it is understood how vehicles will respond in a crash: whether they prioritise the interests of passengers or passersby, or aim to reduce overall harm, and whether they do so without discrimination.

With humanoid robots, developers have an interest in making them appear unthreatening, and some potential therapeutic use cases may be advanced by robots being perceived as companions. Even so, their facade should not replicate human appearance, which could alter humans’ relationship to and perception of them and put vulnerable individuals at risk of exploitation.

Nicola Cain

CEO & Principal Consultant, Handley Gill Limited

On behalf of the Techronicler community of readers, we thank these leaders and experts for taking the time to share valuable insights that stem from years of experience and in-depth expertise in their respective niches.